Great Expectations

Over the last two years, artificial intelligence has captured investors’ attention as the main driving force for technology markets. Whereas names like Nvidia, AMD, and Super Micro were previously debated only among technology enthusiasts and in IT circles, they have now become household names as their stock prices soared and drew media attention.

The key to the growth has been the ongoing arms race as large tech companies bet big on AI and spend aggressively on the necessary infrastructure to power these solutions. Capital expenditures at Microsoft, for example, rose 66% year-over-year to $11 billion while Alphabet’s capex practically doubled to $12 billion in the latest quarter.

The euphoria is by no means limited to technology companies either. In 2023, AI was mentioned over 30,000 times on earnings calls as CEOs and CFOs sought to weave the technology into their product roadmaps and narratives.

Below is a snapshot of IDC’s latest views in three key areas of the AI value chain:

1: AI in Semiconductors

To date, much of investors’ attention has been concentrated on the semiconductor players involved in the AI value chain – and for good reason.

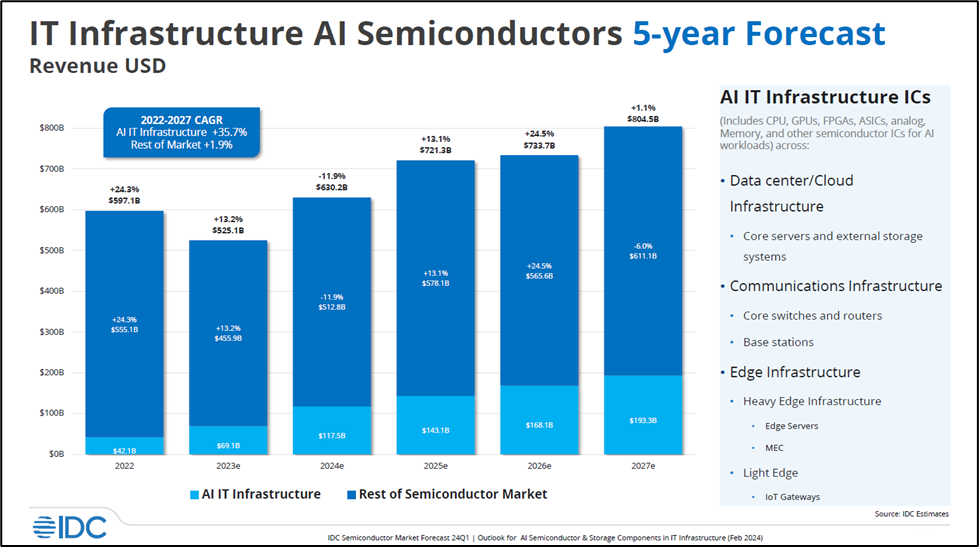

In 2022, IT Infrastructure for AI semiconductors was just $42.1 billion. IDC estimates it rose 64% to $69.1 billion in 2023, and we expect growth to accelerate to 70% in 2024, taking the market to $117.5 billion. By the end of 2027, we forecast that IT Infrastructure for AI semiconductors will reach $193.3 billion, representing a 5-year CAGR of 35.7%.
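As a rough check on how these figures relate, the sketch below recomputes the year-over-year growth rates and the compound annual growth rate (CAGR) from the dollar values quoted above. The function and variable names are illustrative, not IDC's. Because the inputs are rounded to one decimal place, the recomputed CAGR can differ from the quoted 35.7% by about a tenth of a percentage point.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, returned as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size figures quoted in the text, in billions of US dollars.
market = {2022: 42.1, 2023: 69.1, 2024: 117.5, 2027: 193.3}

# Simple year-over-year growth for the two single-year steps.
growth_2023 = market[2023] / market[2022] - 1
growth_2024 = market[2024] / market[2023] - 1

# Five-year CAGR over the full 2022-2027 span.
cagr_2022_2027 = cagr(market[2022], market[2027], 2027 - 2022)

# Derived here, not quoted in the text: the growth rate the forecast
# implies for the remaining three years (2024-2027).
cagr_2024_2027 = cagr(market[2024], market[2027], 2027 - 2024)

print(f"2023 growth:    {growth_2023:.1%}")
print(f"2024 growth:    {growth_2024:.1%}")
print(f"CAGR 2022-2027: {cagr_2022_2027:.1%}")
print(f"CAGR 2024-2027: {cagr_2024_2027:.1%}")
```

The last line is the useful one for reading the forecast: a 5-year CAGR that includes two years of 60-70% growth implies a markedly slower pace for the years that follow.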

The chart above shows CPUs, GPUs, FPGAs, ASICs, Analog, Memory, and other semiconductor ICs that support AI workloads across the datacenter and cloud infrastructure. It also includes communication infrastructure such as switches and routers.

While much of the growth to date has occurred within the datacenter for AI training, we expect the next wave of growth to come as networks continue to virtualize and as AI is infused into network infrastructure workloads. After that, we expect the edge to represent the next big opportunity: the billions of connected devices at the edge will double in volume by the end of our forecast period and will generate a large part of the data that drives AI inferencing.

For a deeper dive into the topic, watch IDC’s 2024 Semiconductor Market Outlook webinar on demand here.

2: AI in Servers

Looking at the overall server market, we see two diverging trends playing out.

In 2023, global server shipments declined 19.4% to 12 million units as macroeconomic pressures continued to slow enterprise investments. Over the same period, however, average selling prices (ASPs) rose 37.1% to $11,376 as hyperscalers and large cloud providers expanded their GPU server deployments, largely driven by AI demand. The rise in ASPs more than offset the lower volumes, leading the total market to grow 10.5% to US$136 billion.
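The way a volume decline and a price increase combine into net revenue growth can be sketched as follows, treating revenue as units multiplied by ASP. The variable names are illustrative, and the 12 million unit figure is rounded in the text, so the recomputed revenue lands slightly above the quoted US$136 billion.

```python
# Year-over-year changes quoted in the text, as fractions.
units_growth = -0.194   # shipments declined 19.4%
asp_growth = 0.371      # average selling price rose 37.1%

# Revenue = units x ASP, so the growth factors multiply.
revenue_growth = (1 + units_growth) * (1 + asp_growth) - 1

# Cross-check against the quoted 2023 levels.
units_2023 = 12_000_000   # rounded figure from the text
asp_2023 = 11_376         # US dollars
revenue_2023 = units_2023 * asp_2023

print(f"Implied revenue growth: {revenue_growth:.1%}")
print(f"Implied 2023 revenue:   ${revenue_2023 / 1e9:.1f} billion")
```

Note that the growth rates multiply rather than add: simply summing -19.4% and +37.1% would overstate revenue growth.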

Homing in specifically on AI servers, IDC estimates that the segment comprised approximately 23% of the total server market in 2023 and will represent an increasingly large portion of the market going forward. By 2027, we forecast the AI server market’s revenue to reach $49.1 billion, with a key assumption that GPU-accelerated server revenue will continue to gain share among all acceleration types.

For clarity’s sake, IDC defines AI Servers as servers that run one or more of the following applications:

- AI platforms: Software platforms dedicated to AI application development; most AI training occurs here.

- AI applications: Applications whose prime function is to execute AI models; most AI inferencing happens in this category.

- AI-enabled applications: Traditional applications with some AI functionality.

This means IDC’s definition includes servers that run AI with the support of a coprocessor, such as a GPU, an FPGA, or an ASIC, referred to as “accelerated servers”, as well as servers that run AI on the host processors only, referred to as “non-accelerated servers”.

3: AI in Devices

While most AI workloads today run in the cloud and in datacenters, there are also compelling arguments for running AI locally on devices such as PCs and smartphones. There are three primary reasons:

• Privacy and security: Enterprises concerned with uploading sensitive data to a public cloud may instead store it locally on their own devices to retain more control.

• Cost savings: It can be more cost-effective over the lifetime of a device to run AI workloads locally to limit the need to access expensive cloud subscriptions or resources.

• Performance and latency: Running AI on-device can eliminate the round trip that workloads make to the cloud and back over the network.

AI PC Market

While the market is still relatively nascent, IDC expects AI PC shipments to begin ramping up in 2024 and become pervasive by 2027. Our Worldwide Personal Computing Device Tracker forecasts 54.2 million AI PC units shipped in 2024, representing 21% of the total PC market. By 2028, we expect this proportion to reach almost 60% of the market, at 166.4 million AI PC units.

Notably, we expect that the commercial segment will lead the AI PC market growth as businesses pilot the technology and identify the most pressing use cases.
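The AI PC unit and share figures above also imply a size for the total PC market, which can be backed out by dividing units by share. The sketch below is illustrative only: the helper name is hypothetical, and because the text says "almost 60%" for 2028, using exactly 0.60 is an assumption that yields a lower bound on the implied total.

```python
def implied_total_units(segment_units_m: float, segment_share: float) -> float:
    """Total market size (millions of units) implied by a segment's units and share."""
    return segment_units_m / segment_share


# AI PC figures quoted in the text: units in millions, share as a fraction.
total_pc_2024 = implied_total_units(54.2, 0.21)

# Assumption: "almost 60%" is treated as exactly 0.60, so this is a lower bound.
total_pc_2028 = implied_total_units(166.4, 0.60)

print(f"Implied total PC market, 2024: {total_pc_2024:.0f} million units")
print(f"Implied total PC market, 2028: at least {total_pc_2028:.0f} million units")
```

Read this way, the forecast is mostly a story about mix shift: AI PC shipments roughly triple while the implied total PC market changes comparatively little.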

AI Smartphone Market

Most smartphones today already have some degree of AI capability integrated, especially around photography. IDC defines “next-gen AI smartphones” as devices with a system-on-a-chip (SoC) capable of running generative AI models on-device more efficiently by leveraging a neural processing unit (NPU) that delivers 30 tera operations per second (TOPS) or more.

In 2024, our preliminary estimate is for 170 million AI smartphones to be shipped, comprising about 15% of global volumes. This represents a more than 3x increase from 2023 shipments, and we expect the share of AI smartphones to continue increasing as more concrete use cases emerge.

We discuss our forecasts in more detail in our AI Devices webinar here.

Looking Ahead: AI Use Cases and Monetization

As the industry continues to evolve, AI will become pervasive across mediums and deployments. While much of the heavy lifting and training will continue to occur in the cloud, more inferencing can be expected to happen on devices and at the edge. This makes it especially critical for investors to track how, and how quickly, AI is spreading across the value chain.

Importantly, to sustain the momentum that has already occurred in capital markets, two things need to happen:

• Use cases for AI must deliver on their promises of enhanced productivity and efficiency. While increasingly fast and powerful hardware continues to be created, the solutions those new capabilities unlock and the “killer apps” that eventually emerge matter far more.

• Monetization paths need to be clear and achieved at a pace that investors deem acceptable. While investors have largely accepted skyrocketing capital expenditures to date, scrutiny will likely increase as shareholders await the uplift in revenues to assess returns on investment.

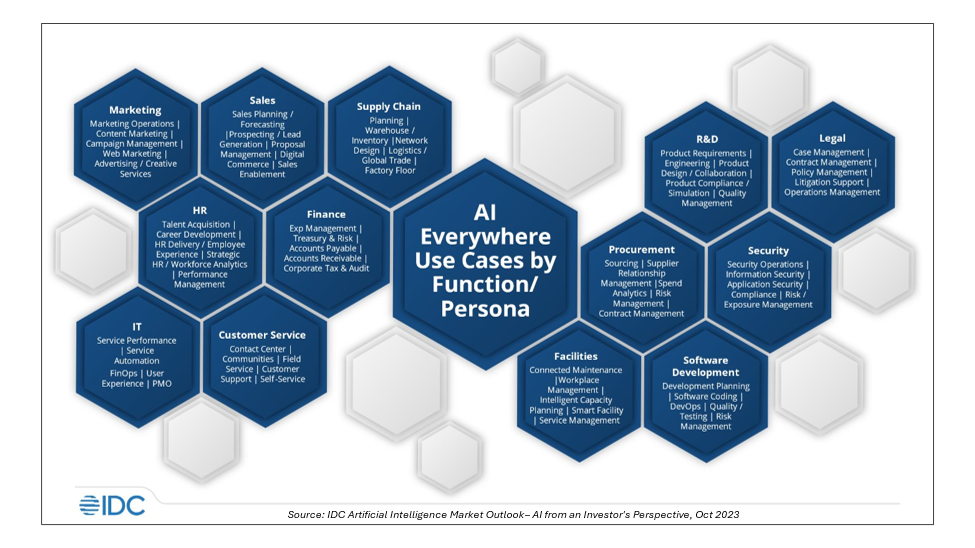

While certainly not exhaustive, below is a snapshot of current AI use cases:
