Back in 2019, IDC’s security and trust team wrote about the potential of artificial intelligence (AI) in cybersecurity. At that time, the approach was to use AI to create analytics platforms that capture and replicate the tactics, techniques, and procedures of the finest security professionals and democratize the unstructured threat detection and remediation process. Drawing on large volumes of structured and unstructured data, along with content analytics, information discovery and analysis, and numerous other infrastructure technologies, AI-enabled security platforms apply deep contextual data processing to answer questions, provide recommendations and direction, and formulate hypotheses and answers based on available evidence.

The goal then was, and still is, to augment the capabilities and improve the efficiency of an organization’s most precious and scarce cybersecurity asset: its cybersecurity professionals. Development typically starts with mundane, remedial tasks and gradually progresses to increasingly complex use cases. Essentially, machine learning allows cybersecurity professionals to find the malicious “needle” in a haystack of data.

Use Cases for AI/ML Today

Since the release of ChatGPT in November 2022, we have seen growing excitement around all things AI, including the application of AI technologies to enable secure outcomes. Most of the interest centers on generative AI, but AI in security is hardly new. Machine learning, a form of AI, has been used in security for more than a decade; it was used to generate malware signatures before algorithmic protections became the rage.

One longtime use of machine learning has been user and entity behavior analytics (UEBA), which identifies anomalous behavior. The analysis covers the configuration, applications, data flows, sign-ons, IP addresses accessed, and network flows of the devices in the environment. For example, does a device usually call out to the device it is now contacting? If not, an alert may be generated so that an analyst can look into the unusual behavior.
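To make the baseline idea concrete, here is a minimal Python sketch of the “does this device usually call out to that device?” check. It is a toy, not how any particular UEBA product works: the class name `PeerBaseline`, the `min_observations` cutoff, and the sample hostnames and addresses are all hypothetical, and real UEBA models weigh many more signals than simple destination counts.

```python
from collections import defaultdict


class PeerBaseline:
    """Toy UEBA-style baseline: remembers how often each device contacts each destination."""

    def __init__(self, min_observations: int = 3):
        # Hypothetical cutoff: a destination counts as "usual" once seen this many times.
        self.min_observations = min_observations
        self.counts = defaultdict(lambda: defaultdict(int))

    def learn(self, src: str, dst: str) -> None:
        # Record one observed connection from src to dst during the learning period.
        self.counts[src][dst] += 1

    def is_anomalous(self, src: str, dst: str) -> bool:
        # Use .get() so that merely checking a pair does not add it to the baseline.
        seen = self.counts.get(src, {}).get(dst, 0)
        return seen < self.min_observations


baseline = PeerBaseline()
for _ in range(5):
    baseline.learn("laptop-17", "10.0.0.5")  # routine traffic to an internal file server

print(baseline.is_anomalous("laptop-17", "10.0.0.5"))     # False: usual destination
print(baseline.is_anomalous("laptop-17", "203.0.113.9"))  # True: never contacted before
```

A `True` result marks the point where a product would raise an alert for an analyst to review; production UEBA would typically use statistical or ML models rather than a fixed count cutoff.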

Many vendors include UEBA in their security information and event management (SIEM) platforms and alert on the anomalies it detects. For example, some SIEMs use ML models to detect domain names produced by domain generation algorithms (DGAs), which attackers use in DNS-based attacks.
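The SIEM models mentioned here are trained classifiers; the sketch below swaps in a much simpler, hand-tuned heuristic purely to show why algorithmically generated domains tend to stand out. It assumes the registered name is the label just before the top-level domain (which mishandles suffixes such as .co.uk), and the 3.8-bit entropy threshold and 12-character minimum are arbitrary illustrative values, not figures from any vendor.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Shannon entropy in bits per character."""
    counts = Counter(text)
    n = len(text)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def looks_generated(domain: str, entropy_threshold: float = 3.8, min_length: int = 12) -> bool:
    """Heuristic DGA check on the label just before the TLD (illustrative thresholds only)."""
    labels = domain.lower().rstrip(".").split(".")
    name = labels[-2] if len(labels) >= 2 else labels[0]
    # Generated names tend to be long and to spread evenly over many distinct characters.
    return len(name) >= min_length and shannon_entropy(name) >= entropy_threshold


for d in ["google.com", "stackoverflow.com", "xjw3kq9vzp7tbm2f.net"]:
    print(d, looks_generated(d))
```

Entropy alone is noisy: a long, legitimate name such as stackoverflow.com scores about 3.55 bits, not far below the threshold, which is one reason to rely on trained models that combine many features.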

Use Cases for Tomorrow

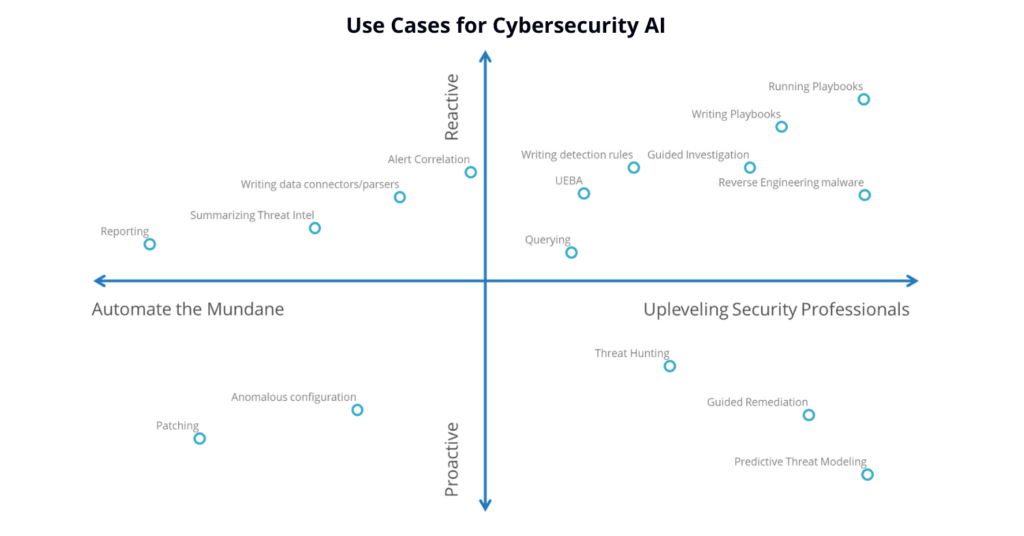

Vendors envision using AI for thankless security work, sparing humans narrowly defined, manual, repetitive tasks so they can pivot more quickly to investigating complex issues the machine does not understand. AI will not recognize what it has not been trained to see, so new tactics and techniques will still require human input.

Generative AI can easily translate from one language to another, whether a spoken language or a machine language. This includes translating natural language queries into the vendor-specific query languages needed to run searches in other tools. Today, SIEM vendors often use rules to correlate alerts into incidents, presenting more information to the analyst in one place.
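Rule-based correlation of alerts into incidents can be sketched in a few lines. The rule here is hypothetical and chosen only for illustration: alerts on the same host that arrive within 15 minutes of that host’s previous alert are grouped into one incident. Real SIEM correlation rules are vendor-specific and usually combine many more conditions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alert:
    host: str
    timestamp: int  # seconds since the epoch
    name: str


@dataclass
class Incident:
    host: str
    alerts: List[Alert] = field(default_factory=list)


def correlate(alerts: List[Alert], window_seconds: int = 900) -> List[Incident]:
    """Hypothetical rule: same host, and within window_seconds of that host's last alert."""
    incidents: List[Incident] = []
    open_incident = {}  # host -> most recent incident for that host
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = open_incident.get(alert.host)
        if current is not None and alert.timestamp - current.alerts[-1].timestamp <= window_seconds:
            current.alerts.append(alert)  # extend the existing incident
        else:
            current = Incident(host=alert.host, alerts=[alert])  # start a new incident
            incidents.append(current)
            open_incident[alert.host] = current
    return incidents


alerts = [
    Alert("web-01", 0, "Suspicious sign-on"),
    Alert("web-01", 300, "New admin account created"),
    Alert("db-02", 400, "Unusual outbound connection"),
    Alert("web-01", 5000, "Service restarted"),
]
for inc in correlate(alerts):
    print(inc.host, [a.name for a in inc.alerts])
```

The two web-01 alerts five minutes apart become one incident, while the later web-01 alert starts a new incident because it falls outside the window.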

AI will be trained to gather the context around an alert, for example by checking with an external service that labels which domains are malicious, so analysts do not have to spend as much time on investigations. AI will also handle investigations more efficiently and prioritize which alerts should be handled first.
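A rough sketch of that enrich-then-prioritize flow follows. The external reputation service is replaced by a hypothetical in-memory set (`KNOWN_MALICIOUS`), and the 1-to-5 severity scale and the +5 boost for a malicious domain are invented weights. The article envisions AI handling this step; the fixed scoring here only stands in for whatever a real system would use.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical stand-in for an external domain-reputation service.
KNOWN_MALICIOUS = {"c2.badhost.example", "evil-updates.example"}


@dataclass
class Alert:
    alert_id: str
    severity: int                 # assumed scale: 1 (low) to 5 (critical)
    domain: Optional[str] = None  # domain observed in the alert, if any


def lookup_reputation(domain: str) -> str:
    # A real integration would query the external service here.
    return "malicious" if domain in KNOWN_MALICIOUS else "unknown"


def enrich_and_prioritize(alerts: List[Alert]) -> List[dict]:
    enriched = []
    for alert in alerts:
        verdict = lookup_reputation(alert.domain) if alert.domain else "n/a"
        # Illustrative scoring: boost alerts that touch a known-malicious domain.
        score = alert.severity + (5 if verdict == "malicious" else 0)
        enriched.append({"id": alert.alert_id, "verdict": verdict, "score": score})
    # Highest score first, so the most urgent alert sits at the top of the queue.
    return sorted(enriched, key=lambda item: item["score"], reverse=True)


queue = enrich_and_prioritize([
    Alert("A-1", 4, "cdn.example"),
    Alert("A-2", 2, "c2.badhost.example"),
    Alert("A-3", 3),
])
print([item["id"] for item in queue])  # ['A-2', 'A-1', 'A-3']
```

The low-severity alert A-2 jumps to the front once enrichment shows it involves a known-malicious domain.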

If the organization trusts it, AI may suggest or write playbooks based on actions analysts regularly take, and it may eventually be trusted to execute those playbooks as well. Generative AI can also recommend next steps, using chatbots to answer questions about policies or best practices. One possible use is a higher-level analyst confirming the actions recommended to junior-level analysts. Over time, organizations will use their own security data and threat pattern recognition capabilities to create predictive threat models.

Other uses for generative AI include:

• Generating reports from threat intelligence data

• Suggesting and writing detection rules, threat hunts, and queries for the SIEM

• Creating management, audit and compliance reports after an incident is resolved

• Reverse engineering malware

• Writing connectors that parse the ingested data correctly so it can be analyzed in log aggregation systems like a SIEM

• Helping software developers write code, search it for vulnerabilities, and offer suggested remediations

Moving Forward

The goal with AI has always been to make security analysts more efficient at defending their organizations against cyber adversaries. However, the cost of AI models and services may be too high for some organizations, and relying on AI to guide analysts and report on security events will only take off if the models are trustworthy.

The data used to train a model must be accurate, or AI-driven decisions will not have the desired effect. The terms “confabulation” and “hallucination” describe cases where a model is wrong because it was trained to give some answer rather than say “I don’t know” when it lacks one. The industry also needs to avoid AI bias, which occurs when the chosen training set is not diverse enough.

Customers and AI suppliers should understand the data behind each decision so they can determine whether training went wrong and how models need to be retrained. Vendors must also protect the data used to train their models: if that data is breached, the model could, for example, be trained to ignore malicious behavior instead of flagging it. Additionally, customers need guardrails to keep proprietary data out of public models.
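The poisoning risk is easy to see even with a toy model. The sketch below trains a deliberately simple classifier (the midpoint between the average benign and average malicious value of a single feature, here hypothetical megabytes sent per hour) and then retrains it after an attacker slips mislabeled records into the training data. The feature, the numbers, and the attack are invented for illustration; real detection models and real poisoning attacks are more complex.

```python
from statistics import mean


def train_threshold(samples):
    """samples: (value, label) pairs, label 'benign' or 'malicious'.
    Toy classifier: threshold at the midpoint between the two class means."""
    benign = [v for v, label in samples if label == "benign"]
    malicious = [v for v, label in samples if label == "malicious"]
    return (mean(benign) + mean(malicious)) / 2


def is_malicious(value, threshold):
    return value > threshold


clean = [(1, "benign"), (2, "benign"), (3, "benign"), (2, "benign"),
         (50, "malicious"), (60, "malicious"), (55, "malicious")]
# Hypothetical attack: high-volume records injected with a "benign" label.
poisoned = clean + [(70, "benign"), (80, "benign"), (90, "benign")]

clean_t = train_threshold(clean)        # 28.5
poisoned_t = train_threshold(poisoned)  # about 45.2
event = 40  # e.g. MB sent to an unfamiliar host in one hour

print(is_malicious(event, clean_t))     # True: flagged
print(is_malicious(event, poisoned_t))  # False: now ignored
```

The same 40 MB event is flagged by the clean model but ignored by the poisoned one, which is why knowing what data sits behind each decision matters when deciding whether a model needs retraining.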

Vendors will also need to monitor their models for drift. AI models cannot be set up and forgotten; they must be tuned and updated with new information. Researchers and other cybersecurity vendors can turn to MITRE’s Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) to better understand the threats to machine learning systems. ATLAS catalogs adversary tactics and techniques against these systems, in a structure similar to the MITRE ATT&CK framework, to help defenders address and resolve such issues.
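One common way to check for drift is to compare the distribution of a model input today against the distribution it was trained on. The sketch below computes the population stability index (PSI), a technique the article does not name but that is widely used for this purpose; the 10 equal-width bins, the small epsilon that avoids taking the log of zero, and the sample data are assumptions. A frequently quoted rule of thumb treats a PSI above roughly 0.2 as a significant shift worth investigating, but teams set their own thresholds.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index of `actual` against the baseline `expected` (both non-empty)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the baseline; out-of-range values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log term below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(v) for v in range(100)]  # feature values seen at training time
same = list(baseline)
shifted = [v + 50 for v in baseline]       # the same feature after behavior changes

print(round(psi(baseline, same), 3))  # 0.0
print(psi(baseline, shifted) > 0.2)   # True: the distribution has drifted
```

In a monitoring job, a check like this would run on a schedule for each important input, with a high score prompting the tuning and updating described above.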

Interested in learning more? Join us for a webinar on May 31st – Unlocking Business Success with Generative AI.