The coming of age of the internet revolutionized the pace of innovation, value creation, and monetization. Today, it is not far-fetched to assume that over half of the world’s 7.8 billion people are connected to the internet. Access to the internet is increasingly considered a basic human right, much like access to food and water. For example, although electricity is scarce in parts of sub-Saharan Africa, it is common for residents to own a phone and pay by the hour to charge it so that they can stay connected.

Users leave behind digital footprints with every small interaction in this connected world and are required to share personal data with the primary provider. They are presented with verbose, opaque, and incomprehensible privacy policy notices that they must consent to, whether willingly, inadvertently, or even unknowingly. As a result, enterprises, ecosystem partners, and users have come to believe that in the digital world, harvesting, aggregating, and trading user data is normal and even unavoidable. Technology and ecosystem partners engaged with the primary provider are likely to have access to this personal data to varying degrees. The goal is to programmatically understand user behavior so that digital content can be hyper-localized for greater consumption, leading to increased profitability. In the process, data privacy is often overlooked, disrespected, and exploited to influence digital behavior in ways that suit a company’s own business interests.

Why Is Data Privacy Such a Hard Nut to Crack?

Data privacy is a broad and loosely defined term that varies across types of organizations, industries, and countries around the globe. This is the primary challenge. Data privacy is also often confused with data security. Data security is about protecting an organization’s intellectual property and keeping it safe, whereas privacy is about what personal data organizations should collect, store, use, and protect.

Data privacy is also a contentious matter. Internet and software service providers, mainly technology giants and governments, have faced severe backlash for not respecting the privacy of digital users. Commercial organizations have been accused of committing the cardinal sin of monetizing user data, and governments have been blamed for doing very little to control it. The user appears to be the weak link. Users are at a disadvantage, not just as individual customers but also as a society, because of their limited understanding of how their personal data will be used, often in ways that infringe on their fundamental right to privacy.

Another challenge is that digital innovation has outpaced the ability of regulatory bodies to implement data privacy and protection regulations in a balanced and flexible manner. While some countries have powerful regulatory mechanisms in place, others have outdated legislation or none at all. In the United States, for example, many of the current federal privacy laws predate the technologies that raise data privacy issues. While governments and regulatory bodies understand that the digital world has latched onto the practices of collecting and mining personal data, clawing out of this mess with timely and appropriate regulations is, to say the least, difficult.

A simple solution would be to implement a one-size-fits-all privacy policy across industries and states, but it is also understood that this would severely hamper innovation and the beneficial uses of data. And while the technology industry has often opted for industry-based self-regulation to address this matter, that approach has been inadequate in many cases, promoting monopolies and potentially disfavoring the parties (users) not included in the “self.” But the key question remains: if users consent to the provider’s policies for harvesting, aggregating, sharing, and using personal data, why should anyone care? The reasons follow.

A Social Dilemma Cannot Become a Social Catastrophe

Recent years have seen an increase in high-profile reports of data privacy violations against name-brand companies such as Google, Facebook, Marriott, and Zoom. While some of these violations stemmed from cybersecurity incidents, others were the outcome of deliberately harvesting and using sensitive personal data to maximize the return on investments of capital, resources, and work. Unfortunately, these business decisions have affected individuals and communities in unimaginable ways, resulting in (but not limited to) the following:

- Manipulating individual online digital activity and personal preferences

- Taking advantage of vulnerable members of society, such as minors

- Influencing cultural norms through social engineering and increasing a sense of polarization

- Spreading fake news and enabling the creation and spread of inflammatory content that can incite violence

- Blurring personal and professional boundaries through use of social media

- Exposing users to potential financial losses from data exposed in cyber breaches

The outcome is a serious erosion of trust between the users and the provider.

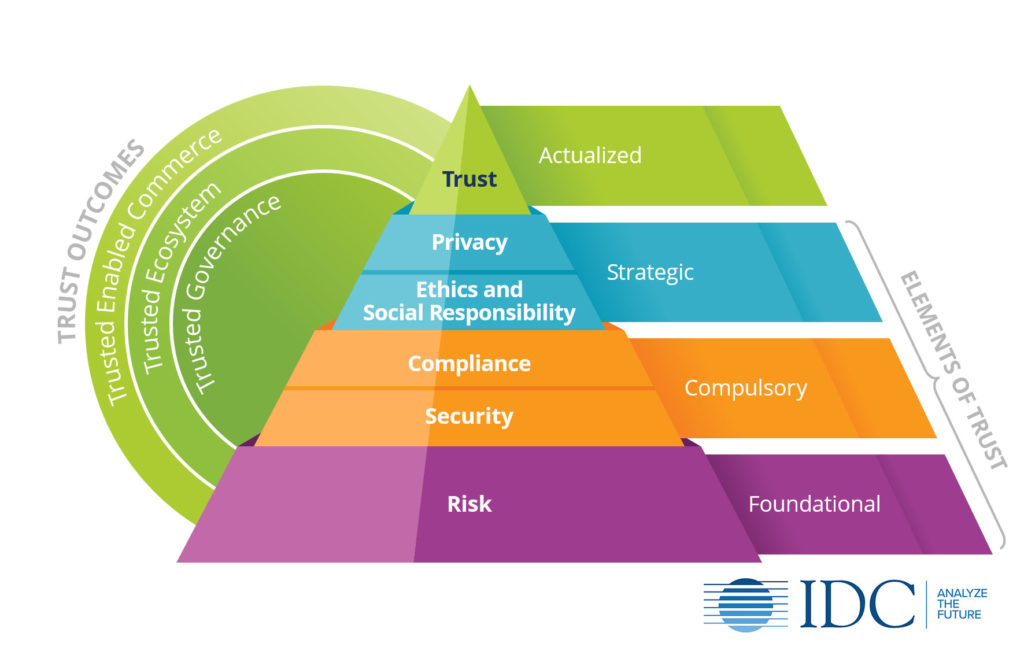

Recognizing this, IDC includes privacy and social responsibility & ethics as two strategic pillars of the Future of Trust (as seen in the figure above) that create positive, trusted outcomes.

Effecting Trusted Outcomes with Core Intrinsic Values

We must recognize that ultimate power rests in the hands of a few tech giants. It falls to these companies to develop the core intrinsic values that will set the stage for the data privacy policies of the future. A strong commitment to privacy and stringent internal policies foster good relationships not just with customers and ecosystem partners but also with auditors and regulators.

In its upcoming Worldwide Social, Environmental Responsibility & Ethics (SERE) 2021 Taxonomy, IDC defines corporate responsibilities in four main categories in which organizations must effect change with core intrinsic values: 1) governance, 2) individual, 3) community, and 4) environment. The SERE cycle (see figure below) incorporates six Ps (people, purpose, plan, policy, process, and performance) that support initiatives across the four categories while strengthening an organization’s brand, reputation, trust, and profitability.

Three subcategories under governance, described below, highlight ways in which organizations can commit to and deliver on their promises toward the well-being of individuals and communities by implementing stringent data privacy policies:

- Ethical Actions & Transparency are the bedrock of good corporate governance. The fundamental idea is that if an organization stands for robust practices of ethical action and transparency, it will draw like-minded partners, thus building a trusted ecosystem. Further, when an organization does not conceal its actions and corporate practices, global suspicion of what goes on “behind closed doors” is diminished or eliminated. Sample initiatives related to data privacy include:

  - Minimize data collection to the amount required to deliver a given service or product.

  - Provide users with a concise and clear explanation of how their data is used or shared and how they can control it.

- Legal Liability results from corporate actions or omissions that are the direct or proximate cause of physical, financial, or emotional damage to another corporation or individual. Such actions or omissions can give rise to criminal culpability, civil culpability, or both. Sample initiatives related to data privacy include:

  - Strictly adhere to regulatory requirements.

  - Ensure that security controls are in place to keep data secure and to permit only authorized access.

- Social Liability refers to the public’s perception of corporate actions or initiatives. Corporate policies focused on short-term profitability must be weighed against their long-term impact on individuals, communities, and the environment. This balance can be achieved only if truthful and participatory dialogue permeates the core values of an organization, resulting in greater trust through social legitimacy. Sample initiatives related to data privacy include:

  - Expand and strengthen fact-checking mechanisms to help eliminate disinformation.

  - Proactively communicate up-to-date and accurate information.

What Do You Think?

As the lead for IDC’s Future of Trust research practice, I invite you to share your thoughts on this topic and to engage with our upcoming research.

The Future of Trust research agenda is packed with thought-provoking themes in 2021. Watch for these key reports in the coming months:

- Future of Trust 1: A C-Suite View of Risk, Security, Compliance, Privacy, Social Responsibility & Ethics (multi-part)

- The Power of Trust: Measuring ROI

- Trust Centers: Committing to Transparency, Accountability & Good Governance

If you would like to learn more about the Future of Trust or IDC’s other “Future of X” practices, visit our website at https://www.idc.com/FoX