This blog complements an IDC Webinar on 4 March 2021 (register for free) as well as an end-user perception and satisfaction study (research starting in Q2 2021) focusing on Responsible AI and Digital Ethics products and services.

Trust, Corporate Citizenship, and the Changing Character of Corporate Sustainability

IDC defines trust as an up-leveling of the security conversation to include attributes such as risk, compliance, privacy, and even business ethics. Over the last few decades, the meaning of “trust” in a corporate setting has grown steadily more complex. Digitalization has contributed to this trend (through concerns around data usage and decision-making processes in artificial intelligence (AI) systems, for instance), but so have the more normatively driven expectations that various stakeholders hold regarding a company’s behavior and corporate citizenship. The number of stakeholders has grown as well, expanding well beyond shareholders.

In this context, “sustainability” has become a major lens for assessing long-term corporate performance in this new stakeholder economy. Companies are no longer valued simply on whether they meet their (short-term) financial goals, but also on their impact on society and the environment.

This lens is not necessarily a new one. Corporate social responsibility (CSR), business ethics, and philanthropy have been taught in business schools across the world for a long time. Corporate scandals, philosophical debates about income inequality and other societal issues, and public attention to enterprises’ negative ecological impacts have been taken up in curricula and have prompted corporate responses (although these have mostly taken the form of boilerplate statements).

From Friedman to Piketty, a lot of thought has been put into corporations’ roles as profit-oriented, yet responsible entities. But many of these philosophical debates have assessed companies’ performance in a rather qualitative way, or have linked companies’ impact to macroeconomic issues.

Meanwhile, “digital” and “sustainable” have become business imperatives. Technological advancements enable sustainable change, while changes in societal beliefs increase demand for new technologies. Today, companies approach sustainability in two different ways, non-commercially and commercially:

- Non-commercially (and more tactically) through normatively driven corporate social responsibility (CSR) and philanthropic efforts

- Commercially (and more strategically) through metrics-driven Environmental, Social, and Governance (ESG) approaches that drive sustainable business strategies

The sustainable use of 3rd platform technologies such as AI can be viewed from the two perspectives described above: a CSR and an ESG perspective.

In the context of AI, “AI for Good” initiatives approach artificial intelligence solutions from a “doing good” perspective, which, if carried out effectively, can help companies create a sense of purpose among their employees. This can ultimately contribute positively to talent attraction, retention, engagement, and performance. Such initiatives can also help enhance organizations’ brand value vis-à-vis their customers. However, unlike ESG approaches, CSR initiatives typically focus on selected aspects of the upside, with little effect on risk management or the strategic pursuit of opportunities for differentiation. “Responsible AI”, on the other hand, addresses the ESG angle, i.e., managing risks and fostering trust.

The Business Case for Responsible AI – Why is This Important?

Products and services addressing Responsible AI and Digital Ethics help organizations reduce data and algorithm bias, increase the transparency and explainability of their AI decision-making processes, ensure legal compliance and ethical alignment with their values, and improve overall AI governance. The moral reasons for investing in such solutions are straightforward: which organization, for instance, would want its artificial intelligence systems to discriminate against its customers or job applicants, or to leak sensitive data?

An ESG-focused approach can help identify several risk areas that can hurt an organization’s operational performance and pose significant financial, reputational, and legal risks.

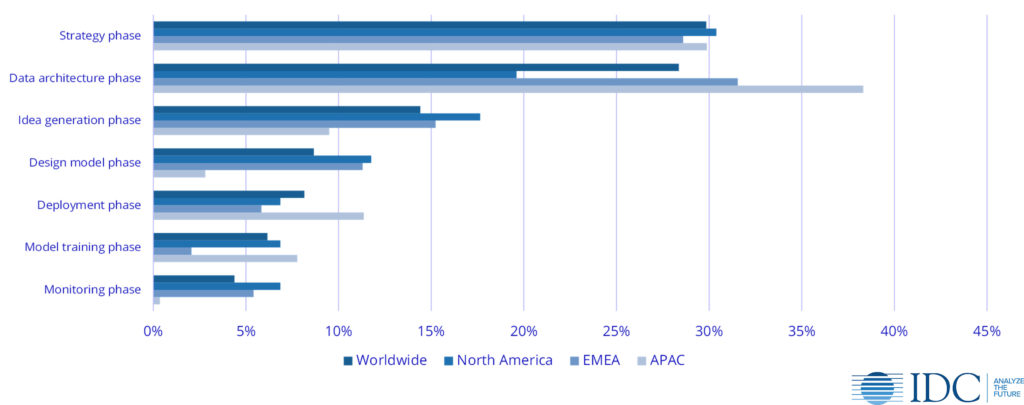

Responsible AI approaches require a comprehensive understanding of the various risks associated with the use of AI technology. IDC’s research shows that most end-users are still at relatively early stages of their Responsible AI journeys, as highlighted by their need for third-party solutions around topics like strategy development and idea generation.

At what part of the lifecycle of AI systems do you see the greatest need for investments in third-party solutions around Ethics & AI?

Taking an ESG approach helps ensure that decision makers assess the full spectrum of non-financial issues that affect their business. “Sustainability” is often used as a synonym for “green business” and companies’ focus on climate change and decarbonization. While environmental issues are a major concern and an important pillar of ESG, social and governance-related issues are equally important to consider and highly relevant for creating a trusted enterprise. The growing standardization and formalization of ESG reporting and disclosure makes it increasingly risky for companies to limit their reporting to topics that are particularly “hot” at the moment or on which they perform well.

Applying an ESG Lens

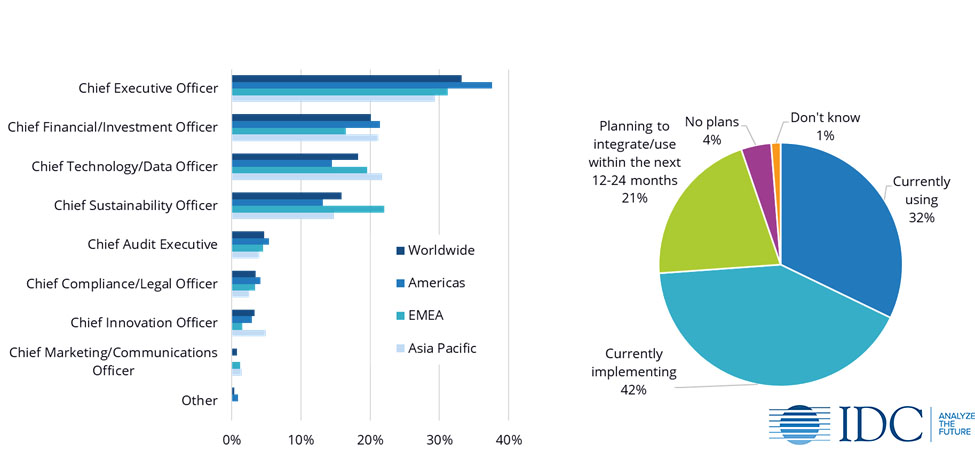

The focus on the impact on enterprise value has made ESG a strategic topic for most companies worldwide, according to a recent IDC survey (see below). ESG has become a CEO-/CFO-level topic, underlining its importance for the entire organization and its implications for risk management as well as differentiation. Almost 75% of companies have already integrated or are currently in the process of integrating ESG considerations into their business approach. The need for a comprehensive, standardized capture of relevant ESG data requires companies to understand reporting and disclosure standards (often with the help of a third-party services provider) and to benchmark their ESG performance using ESG ratings.

Responsibility for Sustainability/ESG Implementation and Current State of ESG Integration

But companies are also catering to other stakeholders: employees are increasingly vocal about corporate actions and choose their employers based on those employers’ behavior as corporate citizens. By focusing on the business case for ESG from an employee perspective, organizations can reduce turnover costs, particularly among younger generations, through improved retention and promotion rates. As more and more members of Generation Z (people born between 1996 and 2010) enter the workforce, their changing wants, needs, and expectations are also influencing companies’ talent perspectives.

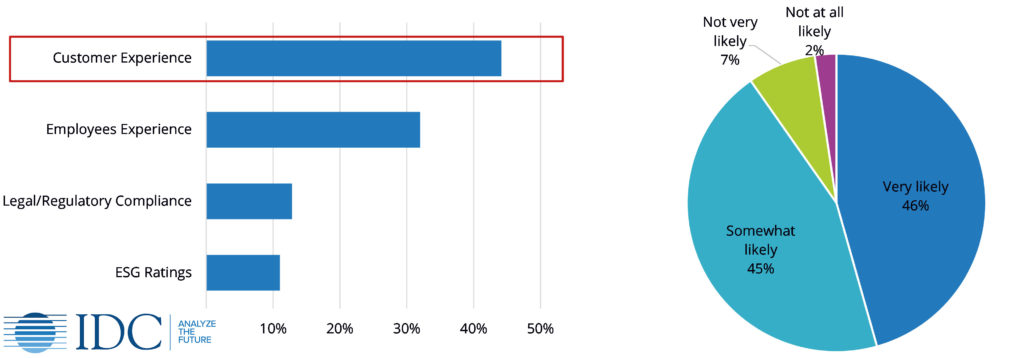

Left: How impactful do you consider your organization’s ethical AI solutions in preserving each of the following? [1 = Not impactful; 5 = Very impactful]

Right: How likely are you to work with a business consulting firm over the next 12-24 months to get help regarding your products/services targeted specifically at members of Generation Z*?

Source (right): IDC Survey Client Demand for Business Consulting Services Around Sustainability/ESG, 2020; n = 650

*born between 1996 and 2010

Customers are another important stakeholder group to consider, and their expectations and requirements of a trusted company can differ from those of, say, investors. While investors tend to assess ESG issues on an industry basis, following ESG standards and frameworks, customers are more focused on brand reputation and rely on channels other than ESG ratings and sustainability reports to make their buying decisions.

The broad spectrum of ESG topics and stakeholder groups requires companies to consider ESG-related risks and business opportunities across all functions, with close interaction among internal stakeholders such as the CEO, CFO, and CIO.

ESG and the Trusted Use of Technology: The Case of Responsible AI

The use of artificial intelligence (AI) provides a great example of how ESG is connected to the various aspects that define trust in the use of 3rd platform technologies. As IDC’s research shows, end-users of AI technology are concerned about the impact of AI in various areas of their business, e.g., overall data privacy, the customer experience (including data privacy, but also fair decision-making processes for mortgages, loans, etc.), the employee experience (non-discriminatory hiring decisions), the organization’s overall reputation, and legal compliance.

These topics are directly linked to several ESG issues outlined by reporting standards such as SASB (e.g., “customer privacy”, “data security”, and “diversity and inclusion”) that are of concern to a variety of stakeholders. Through an ESG lens, decision makers can address the mix of compliance, reputational, and ethical issues that constitute trust in a corporate setting and ensure that each of these topics is addressed sufficiently.

ESG approaches do not necessarily address new phenomena; digitalization and new technologies often expose companies to issues that may already have existed in other forms. But an ESG view helps companies specify, quantify, and assess their ESG-specific risks, identify opportunities for strategic differentiation, and capitalize on this process by turning formerly “non-financial” topics into financially material business issues and “trust” into a critical revenue factor. All of this should constitute a compelling business case for Responsible AI and Data Ethics solutions that goes beyond the mere purpose of “doing good”.

Want to learn more about increasing trust and accountability through Responsible AI? Join our free webinar on Thursday, March 4 at 12pm ET. Reserve your spot: