STAY TUNED for a new post from IDC’s Worldwide Infrastructure Systems, Platforms, and Technology Groups in Fall 2021.

As building Artificial Intelligence (AI) capabilities becomes increasingly urgent, IDC sees that businesses are confused about the process of building their own AI infrastructure stack. IDC is seeing a growing number of AI server, storage, and processor vendors develop AI stacks that consist of abstraction layers, orchestration layers, AI development layers, and data science layers that are intended to seamlessly operate together.

These stacks typically combine open source software, proprietary software, and nonmonetized commercial software (such as CUDA) layers that are intended to help customers’ IT infrastructure teams, developers, and data scientists collaborate on a predesigned stack without having to build it themselves.

IDC believes that AI infrastructure stacks provide a clear advantage to customers and that their variety, while confusing, is not a disadvantage. IDC does not expect vendors to collaboratively develop a “standard” AI infrastructure stack; doing so would defeat the advantage for customers of having multiple flavors to choose from. By offering an AI Plane (AIP) framework, IDC hopes to provide a guide for IT vendors, encouraging them to improve the versatility of their stacks and thereby broaden adoption.

What are Artificial Intelligence Workloads?

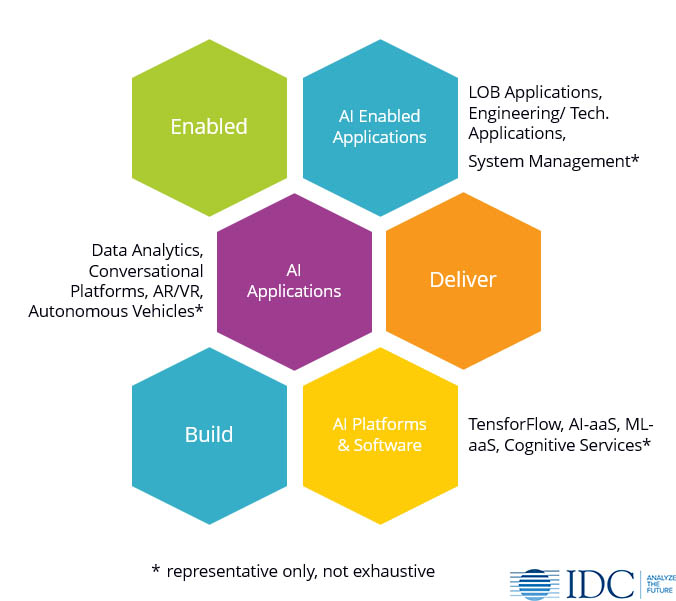

IDC classifies AI workloads into the following categories, based on whether they are used to build AI capabilities, are used to deliver them, or are enabled by them: AI software and platforms, AI applications, and AI-enabled applications.

AI Platforms or Software

This category of AI workloads refers to the tools and platforms used to build or implement AI capabilities. Common AI platforms include ML/DL frameworks (TensorFlow, PyTorch, etc.), Azure Machine Learning Service, Amazon Machine Learning Service, Google Cloud AI Platform, and cognitive services such as Amazon Rekognition, IBM Watson Text to Speech, Microsoft Conversational Kit, etc.

AI Applications

This category refers to workloads that are used to deliver AI capabilities and in which AI technologies/algorithms are central to their functionality. In other words, the application cannot function as intended without AI capabilities. Representative AI applications include conversational assistants (x.ai, Google Contact Center AI, Oracle Digital Assistant, etc.), intelligent automation (UiPath, Automation Anywhere), recommendation engines (Oracle AI Offers, Pega Next Best Action), and autonomous vehicle software (Waymo’s AI software, Tesla Autopilot software, etc.).

AI-Enabled Applications

This category refers to workloads enhanced and/or enabled by AI capabilities. In an AI-enabled application, the application vendor enhances the functionality of an existing application by introducing new AI capabilities without altering its core functionality or behavior. If the AI technologies were removed from an AI-enabled application, it would still be able to function, though possibly less effectively.
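
The three categories above amount to a short decision rule, which can be sketched in Python. This is only an illustration of the definitions in this post, not an IDC tool: the function name, its three yes/no inputs, and the extra `NOT_AI` outcome are assumptions introduced here.

```python
from enum import Enum


class AIWorkloadCategory(Enum):
    PLATFORM = "AI platform or software"
    APPLICATION = "AI application"
    ENABLED_APPLICATION = "AI-enabled application"
    NOT_AI = "not an AI workload"  # assumption: added so the rule is total


def classify_workload(builds_ai: bool, uses_ai: bool,
                      works_without_ai: bool) -> AIWorkloadCategory:
    """Apply the category definitions as a sequence of yes/no questions.

    builds_ai: the workload is a tool or platform used to build AI.
    uses_ai: the workload delivers or is enhanced by AI capabilities.
    works_without_ai: the workload still functions if the AI is removed.
    """
    if builds_ai:
        return AIWorkloadCategory.PLATFORM
    if not uses_ai:
        return AIWorkloadCategory.NOT_AI
    if works_without_ai:
        # AI enhances an existing application without being central to it.
        return AIWorkloadCategory.ENABLED_APPLICATION
    # AI is central: the application cannot function as intended without it.
    return AIWorkloadCategory.APPLICATION


# An ML/DL framework is used to build AI capabilities.
framework = classify_workload(builds_ai=True, uses_ai=True, works_without_ai=False)
# A conversational assistant cannot function as intended without AI.
assistant = classify_workload(builds_ai=False, uses_ai=True, works_without_ai=False)
# A hypothetical existing application with an added AI feature.
enhanced = classify_workload(builds_ai=False, uses_ai=True, works_without_ai=True)
```

The order of the checks matters only in that the "build" question is asked first; the question of whether the workload survives the removal of AI is what separates the last two categories.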

Artificial Intelligence Workloads: AI Plane and AI Stacks

AI workloads place specific requirements on the underlying infrastructure, which can be summarized along three key dimensions:

- Scale. AI workloads need massive-scale compute and huge amounts of data. The AI infrastructure needs to be able to support such scale requirements.

- Portability. This is the ability of the workload to be moved across core, edge, and endpoint deployments. While current AI applications are mostly static in nature, more customers are looking at developing workloads in public cloud and deploying at another location (e.g., edge). The AI infrastructure should be able to support such portability of workloads.

- Time. AI workloads are compute-intensive and ‘batchy’ in nature. That is, they require large amounts of computing resources for limited stretches of time. Increasingly, thanks to the proliferation of high-performance accelerators, AI workloads are also able to analyze streaming data in a real-time or near-real-time manner. The AI infrastructure should support both patterns.
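
One way to make these three dimensions concrete is as a checklist applied to a candidate stack. The sketch below is a minimal illustration under stated assumptions: the `StackCapabilities` fields, the `unmet_requirements` function, and the sample values are all hypothetical and are not part of IDC's framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackCapabilities:
    """Hypothetical summary of what a candidate infrastructure stack offers."""
    scales_compute_and_data: bool   # Scale
    deployment_targets: frozenset   # Portability: e.g. {"core", "edge", "endpoint"}
    supports_batch: bool            # Time: bursts of heavy compute
    supports_streaming: bool        # Time: real-time or near-real-time analysis


def unmet_requirements(stack: StackCapabilities,
                       needed_targets: set,
                       needs_streaming: bool) -> list:
    """Return a description of each dimension the stack fails to cover."""
    gaps = []
    if not stack.scales_compute_and_data:
        gaps.append("scale: cannot support large compute and data volumes")
    # Report every required deployment location the stack does not reach.
    for target in sorted(needed_targets - stack.deployment_targets):
        gaps.append(f"portability: cannot deploy to {target}")
    if not stack.supports_batch:
        gaps.append("time: no support for batch workloads")
    if needs_streaming and not stack.supports_streaming:
        gaps.append("time: no real-time or near-real-time streaming support")
    return gaps


# Hypothetical stack: scales well, runs only in the core, batch only.
candidate = StackCapabilities(
    scales_compute_and_data=True,
    deployment_targets=frozenset({"core"}),
    supports_batch=True,
    supports_streaming=False,
)
gaps = unmet_requirements(candidate, {"core", "edge"}, needs_streaming=True)
```

A real assessment would be far more granular, but the same shape applies: state what the workload needs on each dimension, then list where the stack falls short.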

In the report The “AI Plane”: An Interoperable Framework for Artificial Intelligence Infrastructure Stacks, IDC introduces the AI Plane (AIP), an interoperable framework for selecting the right infrastructure stack to power AI workloads.

AI Plane (AIP)

The AI Plane (AIP) describes the various components of the infrastructure layer required to support AI applications. IDC recommends that enterprises leverage the AIP framework when selecting an appropriate infrastructure stack to power AI workloads. IDC also intends the AIP framework to serve as a guide for IT vendors, encouraging them to improve the versatility of their stacks and thereby broaden adoption.

As history has shown us, vendor attempts at finding common ground benefit the industry at large while preserving vendor-to-vendor differentiation. The following figure summarizes the layers of the AI Plane and their constituent components.

The report also introduces two specific implementations of AIP: Open AI Plane and As-a-Service AI Plane. Open AI Plane is an implementation based on open source tools and options. As-a-Service AI Plane is an implementation based on as-a-service offerings provided by public cloud service providers. For more details, please see the report.

Vendor Stacks

IDC believes that the AI infrastructure stacks that vendors are developing have boosted businesses’ ability to get started with AI. These stacks allow businesses to focus on the actual model development rather than on installing the virtualization, containerization, orchestration, frameworks and libraries, data science tool kits, and so on that they need to run their AI development. The fact that many vendors are creating such stacks also gives businesses a welcome choice.

The IDC report, AI Infrastructure Stack Review H1 2020: The Rapid and Varied Rise of the AI Stack (IDC #US46291620), assesses some of the AI infrastructure stacks that are currently available on the market, including the stacks offered by Intel, AMD, NVIDIA, Cisco, Huawei, NetApp, and HPE. For a complete discussion of these stacks, please check the report. While such stacks are differentiating themselves in how they are enabling customers, they may cause confusion and a lack of interoperability.

IDC recommends that technology buyers thoroughly investigate the entire AI stack that server vendors offer. IT benefits to keep in mind when examining reference stacks include reduced costs, data and application availability, effective infrastructure consolidation and, where possible, a single interoperable application delivery platform. IDC recommends that IT buyers use the AI Plane as a framework to assess and determine interoperability among different AI stacks. IDC also recommends that technology vendors focus on interoperability among AI infrastructure stacks.

Learn more about IDC’s AI stack framework and correlating research, highlighted in our recent press release:

Peter Rutten, Research Director, IDC's Enterprise Infrastructure Practice, also contributed to this piece.