The current CPU-centric (central processing unit) architecture has been the de facto model for all computing platforms regardless of their size and usage, whether deployed in a datacenter, at the edge, or in consumer handhelds. In this computing model, the CPU maintains centralized control of most – if not all – of the key hardware and software functionality delivered by the computing platform itself.

Much like the human brain, the CPU also assumes all responsibilities for the integrity of the mathematical calculations that are done on that platform. It is the supreme commander of the integrity of the data that enters and leaves the platform – whether it is in flight (in memory) or at rest (on a persistent medium that is accessible to the platform).

Extensions that deliver a subset of this platform as a fully SLA-covered system by adding a specific operating system, hypervisor and applications (examples: an external storage system, a security appliance like a firewall, or a hyperconverged appliance) rest on the assumption that the CPU of the computing platform is delivering outcomes as expected. The integrity of applications and data hosted in a datacenter or in the cloud depends on the computing architecture of the server, storage and networking infrastructure. The more centrally placed the system (e.g. a server in a public cloud, or a server used in a virtualization cluster), the more crucial this becomes, as a compromise can impact several businesses or tenants simultaneously. Any compromise to the integrity of the CPU during scaling or operations has an upstream effect on the platform and on the software and data hosted on it.

This computing model has started to show its limitations in the era of hyperscale, multi-tenant and shared-everything computing. As recent vulnerabilities such as Spectre and Meltdown have shown, poison any part of this CPU subsystem with low-level, hardware-centric attacks and you have a corrupted system – and nothing can be done about it unless the CPU itself is removed from the critical path. Platform integrity must be as important to the infrastructure as other operational service level objectives such as reliability, availability, and serviceability. Further, any solution that mitigates, bypasses or completely overcomes the inherent flaws of the computing platform architecture deployed in most servers, storage and networking systems today must be linearly scalable at the very minimum. Finally, the entire hardware stack should be tightly coupled with the operating system environment to deliver a continuously and consistently enforceable security paradigm across the entire infrastructure.

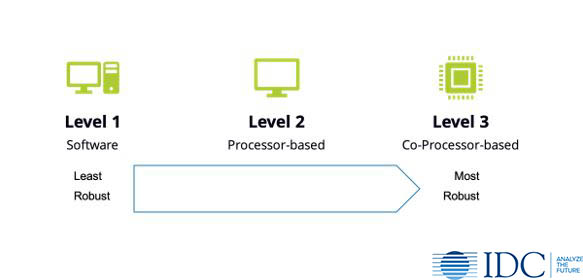

There are three fundamental approaches to preserving platform integrity:

Level 1: Software-Based

Historically, much of the handling and mitigation of challenges associated with the computing platform – including but not limited to the mitigation of hardware issues – has been dealt with via software. Most operating system and hypervisor vendors need to be intimately familiar with the supported hardware platform, and therefore get first dibs on vulnerabilities before they are publicly outed. This enables them to release patches and updates that can limit the damage.

Such patches no doubt offer a layer of protection – an immediate fix to mitigate the situation in the short term – but can still leave the server infrastructure vulnerable to attacks from individuals with access to critical portions of the server, e.g. firmware. For example, some x86 instruction-set-based security solutions are limited in scope and require changes to the operating system, virtualization layer and application stack. They often come with a significant performance penalty, forcing operators to choose between the performance needed to meet increasingly demanding SLAs and adequately securing their clients' important data.

As US-CERT’s Vulnerability Note (VU#584653) says: “the performance impact of software mitigations may be non-trivial and therefore may become an ongoing operational concern for some organizations” and “Deployment contexts and performance requirements vary widely and must be balanced by informed evaluation of the associated security risks.”

Level 2: Processor-Based

One approach to dealing with CPU integrity issues is to create mitigation and bypass capabilities within the processor subsystem itself. Processor integrity can be elevated to the same stature as other security-related datacenter considerations via a fundamental shift in processor design. Processor design – as sophisticated as it has gotten in recent times – should be multi-dimensional and should have modern multi-tenant, highly virtualized and cloud-native deployments in mind. Advanced security features embedded deep into the silicon can help improve execution integrity. Further, the processor subsystem should be able to cryptographically secure data at rest and data in use (i.e. transient data stored in memory).

AMD, for example, has built advanced security features into the core architecture of its x86-based EPYC processor platform, and further enhanced these features for the 2nd generation EPYC platform launched in August 2019. Security capabilities of the AMD EPYC family of processors are managed by a silicon-based embedded security subsystem. This helps businesses safeguard their data in transit and at rest. These processors can cryptographically isolate up to 509 virtual machines per server using AMD Secure Encrypted Virtualization (SEV), with no application changes required.

For this solution to work effectively at scale, the operating system and/or the hypervisor stack must support these extensions. It must provide deep integration with the processor capabilities. And even with these capabilities in place it is likely that sloppy key management can lead to compromises.
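As a rough illustration of what "the stack must support these extensions" means in practice, the sketch below checks two things on a Linux/KVM host: whether the CPU advertises an `sev` flag in `/proc/cpuinfo`, and whether the `kvm_amd` module reports SEV as enabled. The paths reflect common Linux conventions and are assumptions about the host rather than anything stated in this article; the function names are hypothetical.

```python
from pathlib import Path


def cpu_advertises_sev(cpuinfo_text: str) -> bool:
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists 'sev'."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Match the exact token so that e.g. 'sev_es' alone does not count.
        if key.strip() == "flags" and "sev" in value.split():
            return True
    return False


def kvm_sev_enabled(param_value: str) -> bool:
    """Interpret the kvm_amd 'sev' module parameter (assumed to read 1 or Y when on)."""
    return param_value.strip() in {"1", "Y", "y"}


def read_text_or_empty(path: str) -> str:
    """Read a file, returning an empty string if it is missing or unreadable."""
    try:
        return Path(path).read_text()
    except OSError:
        return ""


def sev_support_report() -> dict:
    """Combine the hardware flag and the hypervisor setting into one report."""
    return {
        "cpu_flag": cpu_advertises_sev(read_text_or_empty("/proc/cpuinfo")),
        "kvm_enabled": kvm_sev_enabled(
            read_text_or_empty("/sys/module/kvm_amd/parameters/sev")
        ),
    }


if __name__ == "__main__":
    print(sev_support_report())
```

Both values need to be true before a hypervisor could launch encrypted guests; a processor that has the feature is of little use if the software stack above it has not enabled it.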

Level 3: Coprocessor-Based

An alternative approach is to build a coprocessor into the computing platform that takes over several of the core processor functions. Crucially, it strips away essential low-level security, networking and storage functionality from the central processor, making it nearly impossible for any higher-level network, disk/flash or memory-based software code to exploit low-level hardware vulnerabilities.

Conversely, software-defined computing is also becoming “coprocessor friendly” – the use of coprocessors to intercept, proxy and software-enable hardware calls made by operating systems, hypervisors and container hosts is a new trend that IDC sees gaining fast and strong adoption in the market. A complementary benefit of this approach is that it reduces the CPU overhead allocated for these functions, allowing more of the CPU to be allocated to customer/payload application(s).

This approach, while common among hyperscalers (operators with a large-scale datacenter infrastructure footprint), is not unique to such deployments. In fact, such architectures have been used in consumer devices to protect consumer information. For example, the Apple T2 coprocessor used on some Mac models delivers a similar solution. Server vendors have also used similar approaches to deliver silicon root of trust and out-of-band management capabilities.

However, the latter approaches do not intercept code execution on the processor, nor do they require recoding of the operating system or hypervisor stacks (it is entirely optional for the software stack to make use of such functionality if enabled by the vendor). They stay out of the picture once the boot sequence has completed and have no visibility into the processor runtime environment.

Solutions of this kind in the datacenter are implemented mostly in the hyperscale domain. Public cloud services such as Amazon Web Services (AWS) are leading the pack in deploying them at scale. This is primarily because these service providers have tight control over the hardware design and supply chain (i.e. direct relationships with systems/motherboard manufacturers as well as component suppliers) as well as the operating system environment (including the software-defined hypervisor that is part of it). It is likely that other leading digital and cloud services providers like Facebook, Microsoft, Oracle, IBM and Google are working on or have deployed something similar, though, at least as far as public knowledge goes, they appear to be largely reliant on traditional virtualization.

Introducing Function Offload Coprocessors (FOCPs)

In coprocessor-based approaches, the platform introduces one or more coprocessors into its architecture to take over some of the privileged operations from the CPU. Such coprocessors are also known as function offload coprocessors (FOCPs for short). FOCPs are designed to:

- Operate independently of the CPU, and outside the control of the main processing system from a code execution perspective. In other words, the CPU is aware of their presence but cannot control them.

- Introduce an additional abstraction layer in the bootup and operational state of the platform. They boot up using their own independent microcode, firmware or a lightweight hypervisor that treats the CPU subsystem as one big “virtual machine” running in a reduced privilege mode.

- Control access to physical resources like data persistence and network interfaces through which sensitive data can be accessed. Any payload executed on the CPU, including the kernel itself, that needs access to these resources must go through the function offload interfaces, which are presented in a software-defined manner to the operating system environment running on the CPU.

- Take over direct execution of crucial portions of the embedded and management payload functions such as security, hypervisor root partition, virtual networking etc. Such functions run in kernel space and/or require the process to run in a privileged mode on the host operating system.
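The design goals above can be pictured with a small toy model. The sketch below is illustrative only: the class and method names (`OffloadCoprocessor`, `OffloadInterface`, `grant`, `request`) are hypothetical, and plain Python objects cannot enforce the hardware-level isolation a real FOCP would provide. It only shows the shape of the idea: CPU-side payloads hold a narrow request interface, while policy and physical resources live on the coprocessor side.

```python
from dataclasses import dataclass, field


class AccessDenied(Exception):
    """Raised when a CPU-side payload requests a resource it was not granted."""


@dataclass
class OffloadCoprocessor:
    """Hypothetical FOCP model: owns resources, policy, and an audit trail."""
    _policy: dict = field(default_factory=dict)    # tenant -> set of resources
    _storage: dict = field(default_factory=dict)   # (resource, key) -> data
    audit_log: list = field(default_factory=list)

    def grant(self, tenant: str, resource: str) -> None:
        # Management-plane call; in this model it is never exposed to the CPU.
        self._policy.setdefault(tenant, set()).add(resource)

    def request(self, tenant, resource, op, key, data=None):
        allowed = resource in self._policy.get(tenant, set())
        self.audit_log.append((tenant, resource, op, allowed))
        if not allowed:
            raise AccessDenied(f"{tenant} may not {op} {resource}")
        if op == "write":
            self._storage[(resource, key)] = data
            return None
        if op == "read":
            return self._storage.get((resource, key))
        raise ValueError(f"unknown operation: {op}")


class OffloadInterface:
    """The only handle a CPU-side payload receives: it can submit requests."""

    def __init__(self, focp: OffloadCoprocessor, tenant: str):
        # Bind the tenant identity so the payload cannot claim another one.
        self._submit = lambda resource, op, key, data=None: focp.request(
            tenant, resource, op, key, data
        )

    def write(self, resource: str, key: str, data: bytes) -> None:
        self._submit(resource, "write", key, data)

    def read(self, resource: str, key: str):
        return self._submit(resource, "read", key)
```

In this model, a payload for tenant `vm-a` that has been granted `disk0` can read and write through its interface, while a payload for `vm-b` without a grant gets `AccessDenied`, and both attempts are recorded in `audit_log` on the coprocessor side.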

IDC considers FOCPs, a segment of the function-specific accelerated computing segment (estimated to be a $28.4 billion market in 2024, and itself part of the accelerated computing market), to be here to stay. Service providers and enterprises building their own infrastructure will be able to use FOCP-based platforms to make that infrastructure secure, tamper-proof and highly scalable. They will be able to offload not just embedded (kernel-level) functions onto these FOCPs, but eventually also management functions (think Kubernetes running out of band on an FOCP). This is truly revolutionary, as it means that the FOCP – and not the CPU – is in charge of the system, and common user space workloads have no way to cross over into the FOCP domain, creating a firewall between trusted, privileged and untrustworthy executions.

Learn more about function offload coprocessors with IDC’s new market perspective: